Programming Massively Parallel Processors Chapter3 Exercises (with personal answers)

Chapter structure:

Chapter 3. Scalable parallel execution

3.2 Mapping Threads to Multidimensional Data

3.3 Image Blur: A More Complex Kernel

3.4 Synchronization and Transparent Scalability

3.6 Querying Device Properties

3.7 Thread Scheduling and Latency Tolerance

Summary

Before the exercises, here is the summary of Chapter 3 (Scalable Parallel Execution), which is directly pasted from the "3.8 Summary"

The kernel execution configuration parameters define the dimensions of a grid and its blocks. Unique coordinates in blockIdx and threadIdx allow threads of a grid to identify themselves and their domains of data. It is the responsibility of the programmer to use these variables in kernel functions so that the threads can properly identify the portion of the data to process. This model of programming compels the programmer to organize threads and their data into hierarchical and multidimensional organizations.

Once a grid is launched, its blocks can be assigned to SMs in an arbitrary order, resulting in the transparent scalability of CUDA applications. The transparent scalability comes with a limitation: threads in different blocks cannot synchronize with one another. To allow a kernel to maintain transparent scalability, the simple method for threads in different blocks to synchronize with each other is to terminate the kernel and start a new kernel for the activities after the synchronization point.

Threads are assigned to SMs for execution on a block-by-block basis. Each CUDA device imposes a potentially different limitation on the amount of resources available in each SM. Each CUDA device sets a limit on the number of blocks and the number of threads each of its SMs can accommodate, whichever becomes a limitation first. For each kernel, one or more of these resource limitations can become the limiting factor for the number of threads that simultaneously reside in a CUDA device.

Once a block is assigned to an SM, it is further partitioned into warps. All threads in a warp have identical execution timing. At any time, the SM executes instructions of only a small subset of its resident warps. This condition allows the other warps to wait for long-latency operations without slowing down the overall execution throughput of the massive number of execution units.

Several additional takeaways:

a grid is a three-deminsional array of blocks, and each block is a three-dimensional array of threads

dim3 type is a C struct with three unsigned integer fields: x, y and z, which specify the sized of the three dimensions

CUDA C uses the row-major layout rather than the column-major layout, while BLAS(Basic Linear Algebra Subprograms) uses column-major layout. (usually instruct the users to transpose the input arrays if the call these libs from C programs)

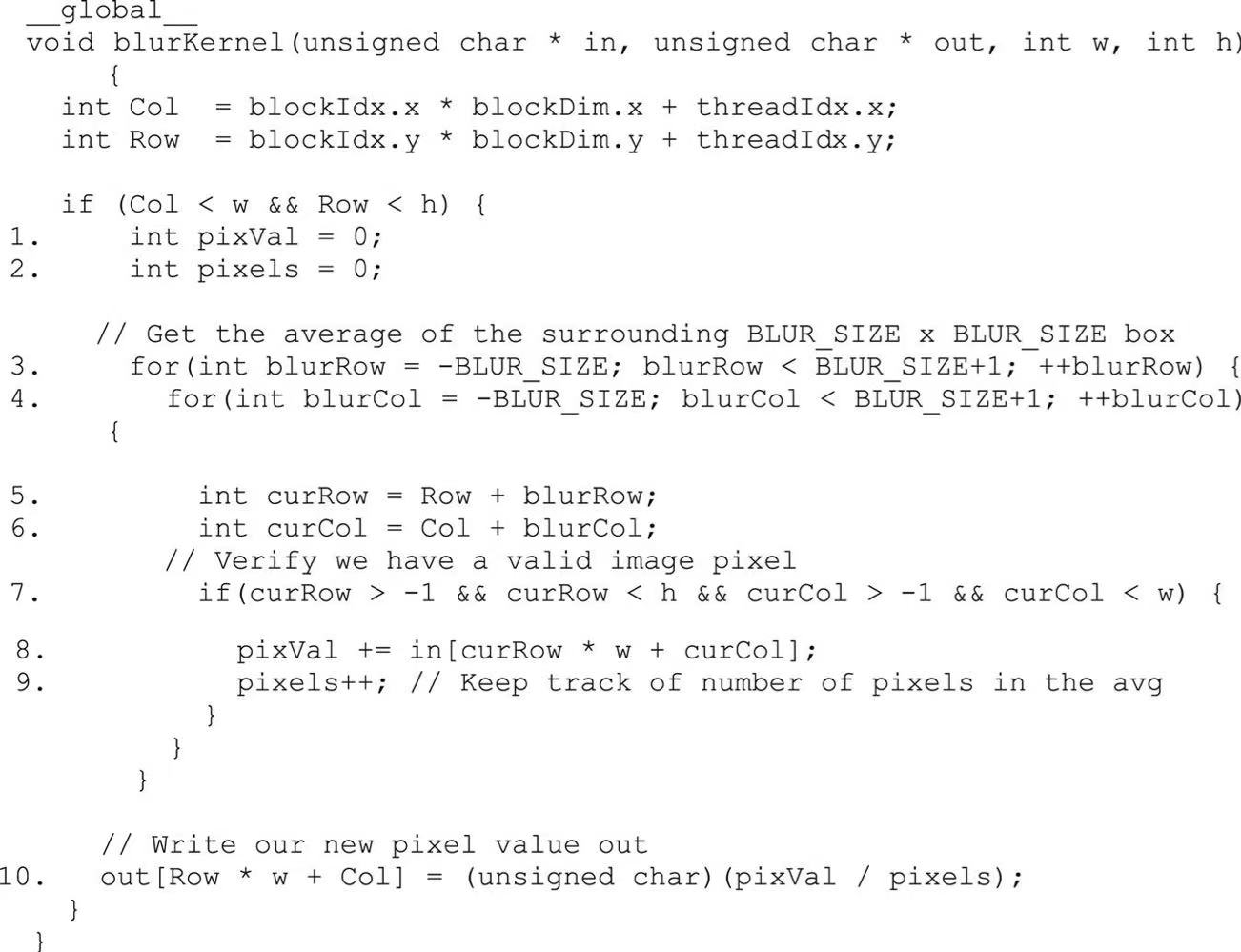

Image Blur Example:

Threads in the same block are allowed to coordinate their activities by using __syncthreads() , which is a barrier synchronization function. (threads should execute in close temporal proximity with each other to avoid excessively long waiting times, which is the responsibility of our programmers)

Device properties can be queried like this:

#include <stdio.h>

#include <cuda_runtime.h>

int main() {

int deviceCount;

cudaGetDeviceCount(&deviceCount);

printf("Number of CUDA devices: %d\n\n", deviceCount);

for (int i = 0; i < deviceCount; i++) {

cudaDeviceProp props;

cudaGetDeviceProperties(&props, i);

printf("Device %d: %s\n", i, props.name);

printf("Compute capability: %d.%d\n", props.major, props.minor);

printf("Memory: %.2f GB\n", props.totalGlobalMem / (1024.0 * 1024.0 * 1024.0));

printf("Max threads per block: %d\n", props.maxThreadsPerBlock);

printf("Max grid dimensions: (%d, %d, %d)\n",

props.maxGridSize[0], props.maxGridSize[1], props.maxGridSize[2]);

printf("Max block dimensions: (%d, %d, %d)\n",

props.maxThreadsDim[0], props.maxThreadsDim[1], props.maxThreadsDim[2]);

printf("Warp size: %d\n\n", props.warpSize);

printf("SM count: %d\n\n", props.multiProcessorCount);

printf("clock rate (MHz): %d\n\n", props.clockRate/1000);

}

return 0;

}ubuntu commands (after save this file as check_gpu.cu):

nvcc check_gpu.cu -o check_gpu

./check_gu

zero-overhead warp scheduling / latency tolerance: the content switching of warps in the same SM cost nothing based on the hardware setting. (When an instruction to be executed by a warp needs to wait for the result of a previously initiated long-latency operation, the warp is not selected for execution. Instead, another resident warp that is no longer waiting for results will be selected for execution. If more than one warp is ready for execution, a priority mechanism is used to select one for execution.)

Exercises

1. Matrix Addition

A matrix addition takes two input matrices A and B and produces one output matrix C. Each element of the output matrix C is the sum of the corresponding elements of the input matrices A and B, i.e., C[i][j]=A[i][j] +B[i][j]. For simplicity, we will only handle square matrices whose elements are single-precision floating-point numbers. Write a matrix addition kernel and the host stub function that can be called with four parameters: pointer-to-the-output matrix, pointer-to-the-first-input matrix, pointer-to-the-second-input matrix, and the number of elements in each dimension. Follow the instructions below:

A. Write the host stub function by allocating memory for the input and output matrices, transferring input data to device; launch the kernel, transferring the output data to host and freeing the device memory for the input and output data. Leave the execution configuration parameters open for this step.

B. Write a kernel that has each thread to produce one output matrix element. Fill in the execution configuration parameters for this design.

C. Write a kernel that has each thread to produce one output matrix row. Fill in the execution configuration parameters for the design.

D. Write a kernel that has each thread to produce one output matrix column. Fill in the execution configuration parameters for the design.

E. Analyze the pros and cons of each kernel design above.

#include <iostream>

#include <cuda_runtime.h>

#include <vector>

#include <cstdlib>

// Kernel declarations (we'll define them later):

__global__ void matrixAddElementwise(float* C, const float* A, const float* B, int N);

__global__ void matrixAddRow(float* C, const float* A, const float* B, int N);

__global__ void matrixAddColumn(float* C, const float* A, const float* B, int N);

// Host function for performing matrix addition

void matrixAddHost(float* hC, const float* hA, const float* hB, int N, int mode)

{

// 1. Allocate device memory

float *dA, *dB, *dC;

size_t size = N * N * sizeof(float);

cudaMalloc((void**)&dA, size);

cudaMalloc((void**)&dB, size);

cudaMalloc((void**)&dC, size);

// 2. Copy host data (A, B) to device

cudaMemcpy(dA, hA, size, cudaMemcpyHostToDevice);

cudaMemcpy(dB, hB, size, cudaMemcpyHostToDevice);

// 3. Launch the kernel.

// mode: 0 -> each thread handles 1 element

// 1 -> each thread handles 1 row

// 2 -> each thread handles 1 column

dim3 blockDim, gridDim;

switch(mode)

{

case 0:

// (B) Each thread produces one output matrix element

// Choose an execution config. We'll fill in in part (B).

// For example, we can use a 2D grid/block:

blockDim = dim3(16, 16);

gridDim = dim3((N + blockDim.x - 1) / blockDim.x,

(N + blockDim.y - 1) / blockDim.y);

matrixAddElementwise<<<gridDim, blockDim>>>(dC, dA, dB, N);

break;

case 1:

// (C) Each thread produces one output matrix row

// Fill in in part (C).

blockDim = dim3(256);

gridDim = dim3((N + blockDim.x - 1) / blockDim.x);

matrixAddRow<<<gridDim, blockDim>>>(dC, dA, dB, N);

break;

case 2:

// (D) Each thread produces one output matrix column

// Fill in in part (D).

blockDim = dim3(256);

gridDim = dim3((N + blockDim.x - 1) / blockDim.x);

matrixAddColumn<<<gridDim, blockDim>>>(dC, dA, dB, N);

break;

default:

std::cerr << "Invalid mode\n";

break;

}

// 4. Copy the result C back to host

cudaMemcpy(hC, dC, size, cudaMemcpyDeviceToHost);

// 5. Free device memory

cudaFree(dA);

cudaFree(dB);

cudaFree(dC);

}

__global__ void matrixAddElementwise(float* C, const float* A, const float* B, int N)

{

// 2D indices based on 2D grid/block arrangement

int col = blockIdx.x * blockDim.x + threadIdx.x;

int row = blockIdx.y * blockDim.y + threadIdx.y;

// Check boundaries

if (row < N && col < N)

{

int index = row * N + col;

C[index] = A[index] + B[index];

}

}

__global__ void matrixAddRow(float* C, const float* A, const float* B, int N)

{

// Each block + thread combination yields a single row index

int row = blockIdx.x * blockDim.x + threadIdx.x;

if (row < N)

{

int rowStart = row * N; // starting index of row

for (int col = 0; col < N; col++)

{

int idx = rowStart + col;

C[idx] = A[idx] + B[idx];

}

}

}

__global__ void matrixAddColumn(float* C, const float* A, const float* B, int N)

{

// Each block + thread combination yields a single column index

int col = blockIdx.x * blockDim.x + threadIdx.x;

if (col < N)

{

// For each row in this column

for (int row = 0; row < N; row++)

{

int idx = row * N + col;

C[idx] = A[idx] + B[idx];

}

}

}

int main(int argc, char** argv)

{

// 1. Parse command-line arguments

// We'll expect up to 2 arguments:

// argv[1] = N (dimension), argv[2] = mode (0=elementwise, 1=row-based, 2=column-based)

int N = 1024; // default size

int mode = 0; // default mode

// If user provided at least 1 argument, parse N

if (argc > 1) {

N = std::atoi(argv[1]);

}

// If user provided 2 arguments, parse mode

if (argc > 2) {

mode = std::atoi(argv[2]);

}

// 2. Allocate and initialize host matrices A, B, C

std::vector<float> A(N*N), B(N*N), C(N*N, 0.0f);

// Fill A and B with some values for testing

for (int i = 0; i < N*N; ++i)

{

A[i] = static_cast<float>(i % 100);

B[i] = static_cast<float>(i % 50);

}

// 3. Perform matrix addition in the chosen mode

// mode: 0 => elementwise, 1 => row-based, 2 => column-based

matrixAddHost(C.data(), A.data(), B.data(), N, mode);

// 4. (Optional) Verify correctness for a small subset of elements

for (int i = 0; i < 5; i++)

{

std::cout << "C[" << i << "] = " << C[i]

<< " (should be A[" << i << "] + B[" << i << "] = "

<< (A[i] + B[i]) << ")\n";

}

return 0;

}

2. Matrix-vector multiplication

A matrix–vector multiplication takes an input matrix B and a vector C and produces one output vector A. Each element of the output vector A is the dot product of one row of the input matrix B and C, i.e., A[i]=∑j B[i][j]+C[j]. For simplicity, we will only handle square matrices whose elements are single-precision floating-point numbers. Write a matrix–vector multiplication kernel and a host stub function that can be called with four parameters: pointer-to-the-output matrix, pointer-to-the-input matrix, pointer-to-the-input vector, and the number of elements in each dimension. Use one thread to calculate an output vector element.

3.

If the SM of a CUDA device can take up to 1536 threads and up to 4 thread blocks. Which of the following block configuration would result in the largest number of threads in the SM?

A. 128 threads per block

B. 256 threads per block

C. 512 threads per block

D. 1024 threads per block

Answer: B

4.

For a vector addition, assume that the vector length is 2000, each thread calculates one output element, and the thread block size is 512 threads. How many threads will be in the grid?

A. 2000

B. 2024

D. 2048

D. 2096

5.

With reference to the previous question, how many warps do you expect to have divergence due to the boundary check on vector length?

A. 1

B. 2

C. 3

D. 6

Answer: A

6.

You need to write a kernel that operates on an image of size 400 ×900 pixels. You would like to assign one thread to each pixel. You would like your thread blocks to be square and to use the maximum number of threads per block possible on the device (your device has compute capability 3.0). How would you select the grid dimensions and block dimensions of your kernel?

7.

With reference to the previous question, how many idle threads do you expect to have?

8.

Consider a hypothetical block with 8 threads executing a section of code before reaching a barrier. The threads require the following amount of time (in microseconds) to execute the sections: 2.0, 2.3, 3.0, 2.8, 2.4, 1.9, 2.6, and 2.9 and to spend the rest of their time waiting for the barrier. What percentage of the total execution time of the thread is spent waiting for the barrier?

9.

Indicate which of the following assignments per multiprocessor is possible. In the case where it is not possible, indicate the limiting factor(s).

A. 8 blocks with 128 threads each on a device with compute capability 1.0

B. 8 blocks with 128 threads each on a device with compute capability 1.2

C. 8 blocks with 128 threads each on a device with compute capability 3.0

D. 16 blocks with 64 threads each on a device with compute capability 1.0

E. 16 blocks with 64 threads each on a device with compute capability 1.2

F. 16 blocks with 64 threads each on a device with compute capability 3.0

10.

A CUDA programmer says that if they launch a kernel with only 32 threads in each block, they can leave out the __syncthreads() instruction wherever barrier synchronization is needed. Do you think this is a good idea? Explain.

11.

A student mentioned that he was able to multiply two 1024 ×1024 matrices by using a tiled matrix multiplication code with 32 ×32 thread blocks. He is using a CUDA device that allows up to 512 threads per block and up to 8 blocks per SM. He further mentioned that each thread in a thread block calculates one element of the result matrix. What would be your reaction and why?